Nuance speech processing

Nuance provides speech resources for applications. A typical IVR application makes recognition and/or text-to-speech requests to a voice browser. The browser interprets the requests and uses an MRCP client to relay them to the Nuance Speech Server, which passes the requests on to the appropriate speech resource. The results return along the same pathway. It is also possible to develop applications on any other platform, as long as the platform ultimately relays all requests via an MRCP client.

This topic provides an overview of the entire speech environment. Primarily, it addresses how a voice application controls speech resources through an MRCP client. The voice browser developer typically implements MRCP features from two perspectives: the requirements of VoiceXML applications (which the browser must interpret) and the requirements of the platform (which the browser must define).

Tip: For a discussion of how you can support multiple companies or applications in a single deployment, see Hosted environments.

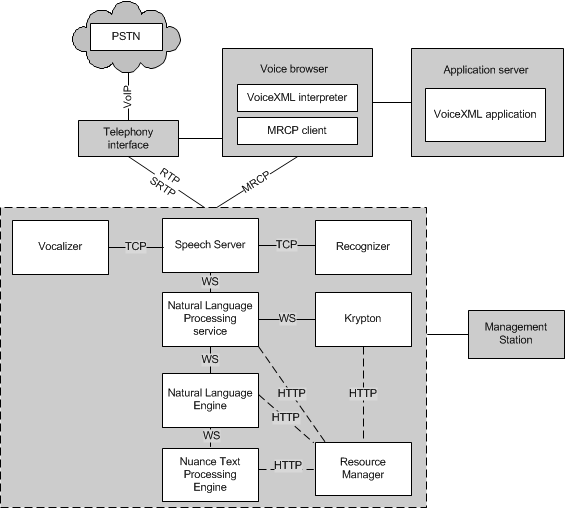

The following diagram illustrates the main components of a typical IVR speech processing system that incorporates Nuance speech products. The figure shows the Speech Suite software used with a VoiceXML browser and a VoIP telephony gateway to the public switched telephone network (PSTN).

The voice browser consists of the VoiceXML interpreter context and the VoiceXML interpreter. The VoiceXML interpreter context is responsible for detecting an incoming call, acquiring the initial VoiceXML document, and answering the call, while the VoiceXML interpreter conducts the dialog after the call is answered.

The voice browser requests VoiceXML documents from an HTTP application server, which returns them for processing by the VoiceXML interpreter. The browser also processes events generated in response to user actions (for example, spoken or character input received) or system status changes (for example, timer expiration).

The MRCP client manages speech sessions through the Nuance Speech Server, receives application requests from the browser, translates requests into standard MRCP messages, and returns results to the application through the browser.
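To make "translates requests into standard MRCP messages" concrete, here is a minimal sketch of how an MRCPv2 request is framed under RFC 6787: a start line `MRCP/2.0 message-length method-name request-id`, header fields, a blank line, and an optional body. Because `message-length` counts every octet of the message, including the start line and its own digits, the sketch grows its estimate until the value is self-consistent. The function name, the channel identifier, and the placeholder grammar body are hypothetical illustrations, not Nuance's client API; a real MRCP client also handles SIP session setup, responses, and events.

```python
from typing import Optional


def build_mrcpv2_request(
    method: str,
    request_id: int,
    channel_id: str,
    body: bytes = b"",
    content_type: Optional[str] = None,
) -> bytes:
    """Frame an MRCPv2 request following the RFC 6787 layout (sketch only)."""
    # Channel-Identifier ties the request to a resource channel; the value
    # used below is a hypothetical example.
    headers = [f"Channel-Identifier:{channel_id}"]
    if body:
        if content_type is None:
            raise ValueError("a message body requires a content type")
        headers.append(f"Content-Type:{content_type}")
        headers.append(f"Content-Length:{len(body)}")
    # Each header ends with CRLF; an empty line separates headers from the body.
    header_block = "".join(h + "\r\n" for h in headers) + "\r\n"
    tail = f" {method} {request_id}\r\n{header_block}".encode("utf-8") + body
    prefix = b"MRCP/2.0 "
    # message-length includes its own digits, so iterate until it is stable.
    length = len(prefix) + len(tail)
    while True:
        total = len(prefix) + len(str(length)) + len(tail)
        if total == length:
            return prefix + str(length).encode("ascii") + tail
        length = total


if __name__ == "__main__":
    # Placeholder body; a real RECOGNIZE request carries an SRGS grammar.
    msg = build_mrcpv2_request(
        "RECOGNIZE", 543257, "32AECB23433801@speechrecog",
        b"<grammar/>", "application/srgs+xml",
    )
    print(msg.decode("utf-8"))
```

In a deployment, the browser never builds these bytes itself: it hands the request to the MRCP client, which frames it and sends it to the Speech Server over the MRCPv2 connection.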

Note: Nuance speech products also support other voice platforms that don't use traditional VoiceXML or telephony. Any platform that provides a compatible MRCP client can interface with Nuance Speech Suite services.

These components can run on several different hosts or, for testing or development purposes, as separate processes on a single host.

The diagram shows the protocols that speech system components use:

- RTP (Real-time Transport Protocol) or SRTP (Secure Real-time Transport Protocol) between the telephony interface and Speech Server.

- MRCPv2 (SIP or TCP) between the voice browser (VoiceXML interpreter/MRCP client) and Speech Server. (MRCPv1 is not supported for Dragon Voice components.)

- TCP (Transmission Control Protocol) between Speech Server and both Nuance Recognizer and Nuance Vocalizer. These three components always communicate over TCP. There's no mechanism to secure these communications (and no security benefit in doing so).

- WebSocket (WS) protocol between Speech Server and Dragon Voice components: Natural Language Processing service, Krypton recognition engine, Natural Language Engine (NLE), and Nuance Text Processing Engine (NTpE) instances.

- HTTP protocol between Dragon Voice components and Nuance Resource Manager.

- If the voice browser uses MRCPv1 (not supported for Dragon Voice components), signaling uses RTSP (Real Time Streaming Protocol) and audio uses RTP (Real-time Transport Protocol). (MRCPv2 uses SIP or TCP for signaling.)
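The protocol list above can be read as a small lookup table. The following sketch encodes each link and its protocols as listed, plus the rule that MRCPv1 is not supported for Dragon Voice components, so a deployment description can be sanity-checked. The component labels and the `validate_link` function are hypothetical names chosen for this illustration; they are not Nuance configuration keys or tools.

```python
# Links and protocols transcribed from the list above.
# Component labels are hypothetical names used only in this sketch.
LINK_PROTOCOLS: dict[tuple[str, str], set[str]] = {
    ("telephony", "speech_server"): {"RTP", "SRTP"},
    ("voice_browser", "speech_server"): {"MRCPv1", "MRCPv2"},
    ("speech_server", "recognizer"): {"TCP"},
    ("speech_server", "vocalizer"): {"TCP"},
    ("speech_server", "dragon_voice"): {"WebSocket"},
    ("dragon_voice", "resource_manager"): {"HTTP"},
}


def validate_link(
    src: str, dst: str, protocol: str, dragon_voice_in_use: bool = False
) -> list[str]:
    """Return a list of problems with one link; an empty list means it is valid."""
    allowed = LINK_PROTOCOLS.get((src, dst))
    if allowed is None:
        return [f"no direct link between {src} and {dst}"]
    problems = []
    if protocol not in allowed:
        problems.append(
            f"{protocol} is not used between {src} and {dst}; "
            f"expected one of {sorted(allowed)}"
        )
    # MRCPv1 is not supported for Dragon Voice components.
    if protocol == "MRCPv1" and dragon_voice_in_use:
        problems.append("MRCPv1 is not supported for Dragon Voice components")
    return problems


if __name__ == "__main__":
    print(validate_link("speech_server", "recognizer", "TCP"))
    print(validate_link("voice_browser", "speech_server", "MRCPv1", dragon_voice_in_use=True))
```

Returning a list of problems rather than raising on the first one lets a checker report every misconfigured link in a single pass.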

Nuance Management Station uses the watcher service to monitor and manage the components inside the dashed border.

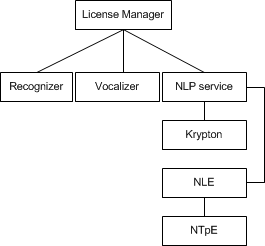

Nuance License Manager (not shown above) can be installed on a dedicated host or on a host with speech components. All communication with License Manager uses TCP. The Natural Language Processing service is responsible for checking out licenses on behalf of other Dragon Voice components.

You can control Nuance speech-processing systems in a variety of ways. Each component of a system has its own set of parameters, and you can control some parameters in more than one place. See Controlling speech recognition and TTS.